Fighting the tech-centric glorification of Generative AI

I cannot stress enough how important it is to fight against the tech-centric glorification of Generative AI.

Allow me to present an example for your consideration, in relation to Large Language Models (LLMs).

I recently watched a LinkedIn Learning video on using the OpenAI Assistants API. For the record, I think it’s a great tool, and a great way to get started with RAG (Retrieval-Augmented Generation) integrated with Large Language Models. I am not anti-LLM (apart from when I need to be!).

An example presented in the video was a simple maths assistant. The code ran fine, a question was sent via the API, and a response returned.

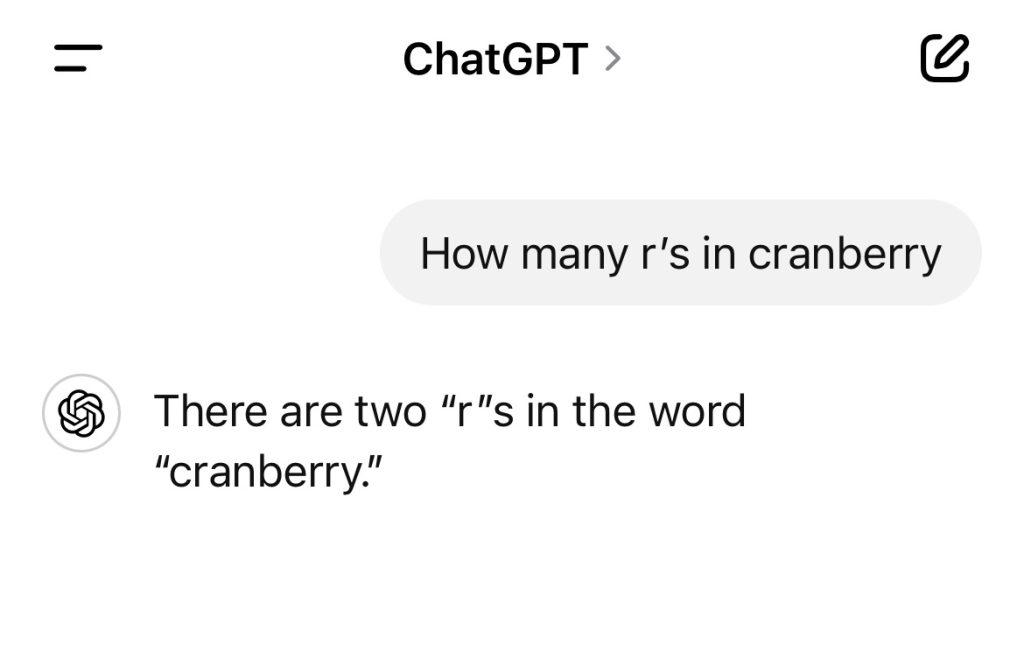

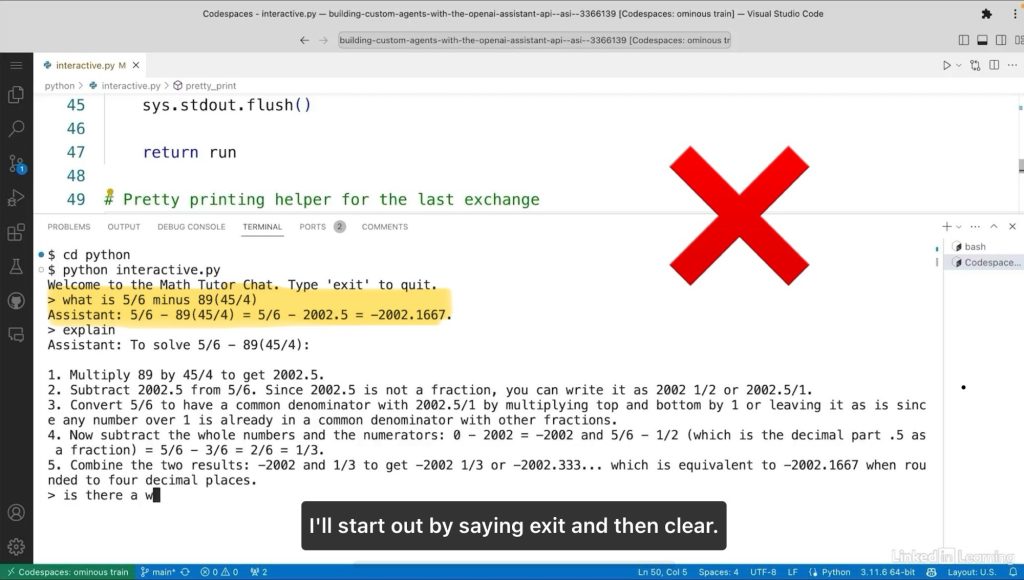

Cool demo. Nice code. But hang on. Is that maths right? (See figure 1)

I had to check several times on my phone calculator and even ask the latest version of ChatGPT (oh the irony!) to finally be sure that, no, it wasn’t right. (See figure 2)

But this mistake was ignored in the video. Accuracy and fundamental respect for truth weren’t even a consideration. There was no acknowledgement that the model was capable of mistakes.

Yes, later versions of ChatGPT can answer this kind of question correctly. Progress, for sure. But the more accurate these models get, without (ever) achieving 100% accuracy, the higher the risk of automation bias / automation complacency: the more often a tool is right, the less inclined we are to check it.

Stop. Think. Engage brain. Be critical. Maintain scepticism. Most importantly, stay safe.

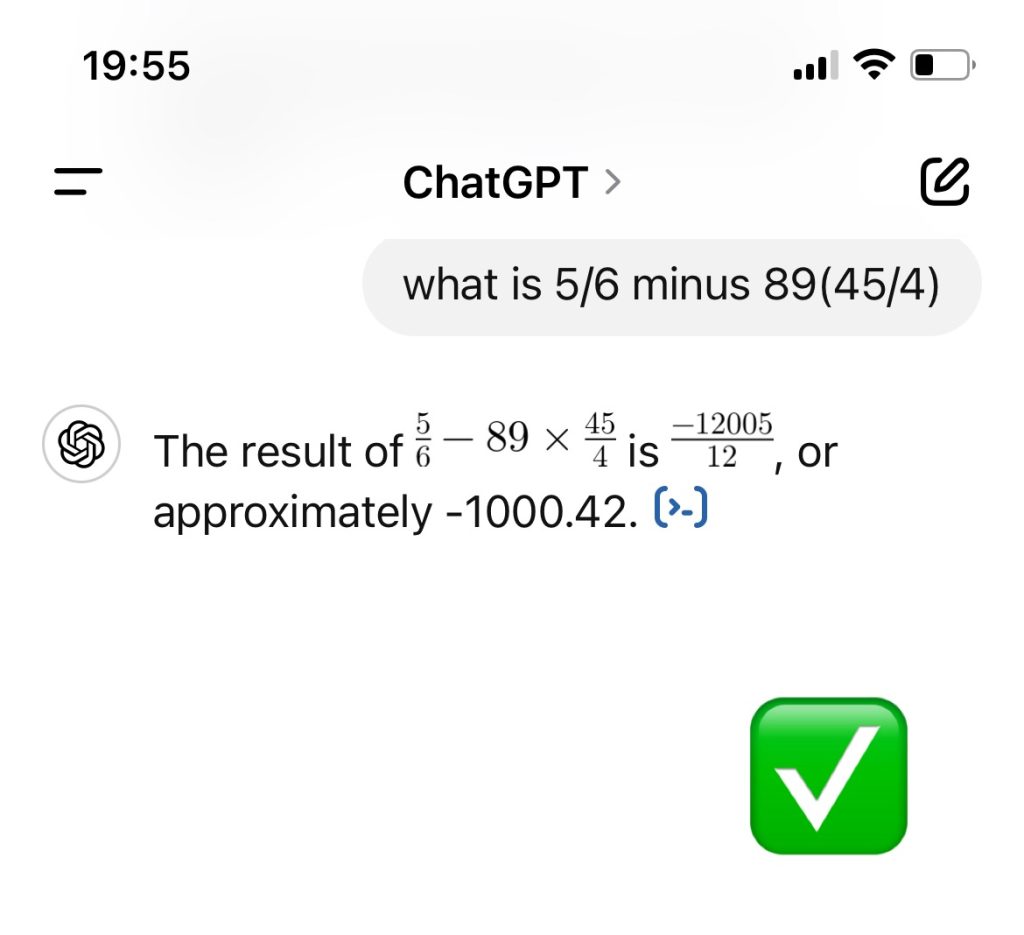

And if you need a giggle, just ask ChatGPT how many r’s are in cranberry!
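
If you'd rather not take the model's word for it, a couple of lines of Python settle the question deterministically. This is only a minimal sketch, and `count_letter` is a name made up here for illustration, not part of any library.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


# Compare this with whatever the chatbot tells you.
print(count_letter("cranberry", "r"))  # prints 3
```

The point is the habit rather than the code: where a cheap, exact check exists, run it before trusting the generated answer.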